How to Build a Chatbot for County Websites + Checklist


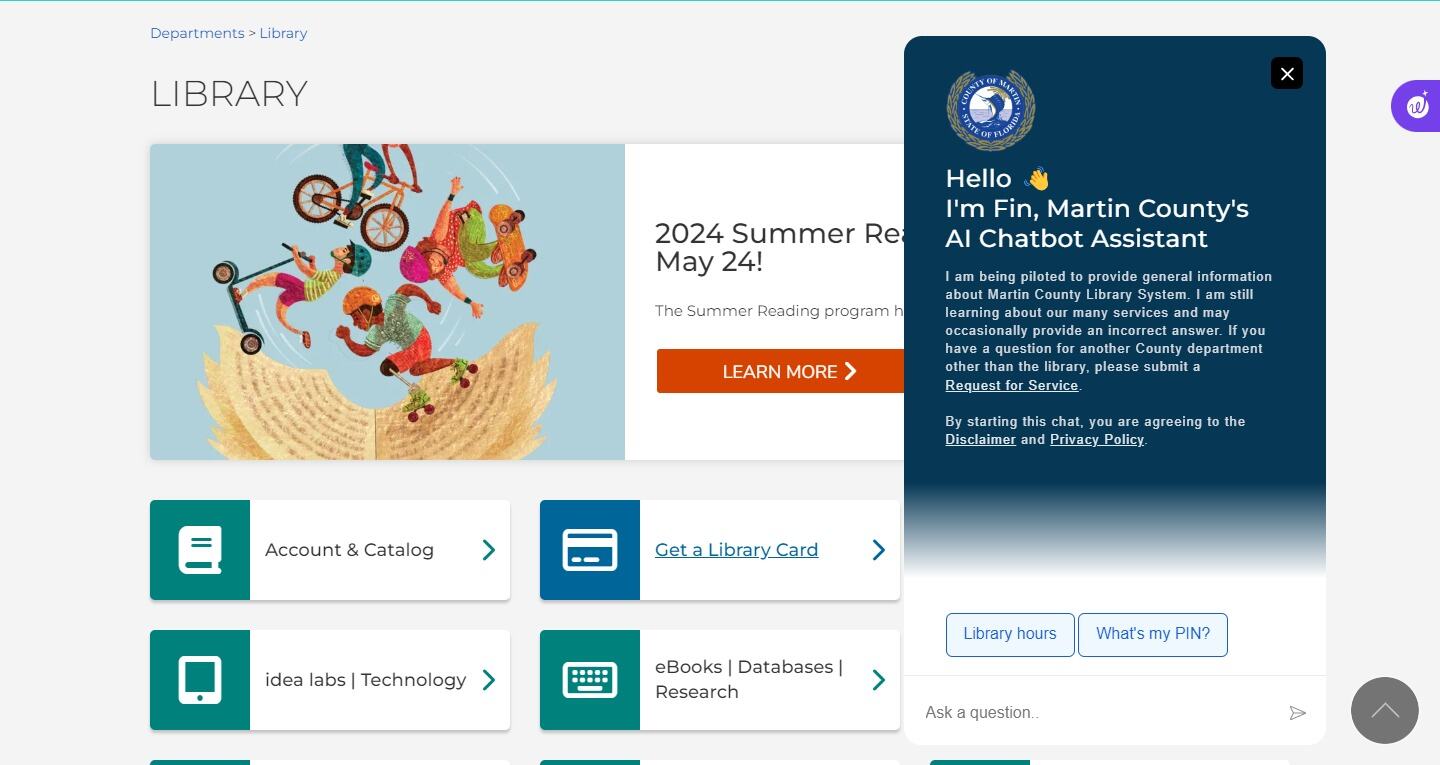

Takeaway: Building and adding an AI chatbot on a county website requires careful planning, development, deployment, and maintenance. We recently used Civic AI Navigator (formerly Promethia), our custom chatbot solution, to build a chatbot for the Martin County Library System and did a live demo for Drupal4Gov.

So, I wanted to create the ultimate guide to AI chatbot implementation for government websites to help improve citizen engagement and local government customer service:

- Identify clear goals and use cases, and work closely with stakeholders to gather requirements and example questions.

- Choose the right AI solutions based on your needs, and experiment with different approaches like fine-tuning and retrieval-augmented generation to ensure accurate and up-to-date information.

- Implement safeguards, optimize for the LLM's context window, and ensure accessibility during the development phase.

- Provide documentation and training, consider a soft launch, and implement a feedback mechanism during deployment.

- Continuously analyze chatbot conversations, update the knowledge base, and stay informed about new LLM solutions during the maintenance phase.

START BUILDING WITH OUR FREE CHATBOT IMPLEMENTATION CHECKLIST

Planning phase

In this section, I detail the crucial steps that laid the foundation for the success of the Martin County library chatbot project, "Fin."

Identify the goals and use cases

When planning the implementation of an AI chatbot for a county website, the first step is to identify the goals and use cases. It's crucial to determine what information and services the chatbot should provide.

In the case of Martin County, we focused on their library system, aiming to provide users with information about library hours, locations, and services like room reservations.

To ensure the chatbot meets the client's needs, don’t assume you know what should go into the chatbot—collaborate with the client and gather their requirements.

Identify the AI solution (or solutions) to use

For our project, we use GPT-3.5 Turbo for utility tasks like generating keyword searches against the website database, since it is cheaper and faster than GPT-4 Turbo. For full conversation responses, we use the more capable GPT-4 Turbo.

This helps keep costs down and improves efficiency.
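A minimal sketch of this routing idea, assuming requests are built as OpenAI Chat Completions request bodies (`model` plus `messages`). The task names, the `MODEL_FOR_TASK` table, and the `build_request` helper are hypothetical; only the two model identifiers come from the paragraph above.

```python
# Hypothetical task categories mapped to the model that handles them:
# a cheap, fast model for utility work and a more capable one for replies.
MODEL_FOR_TASK = {
    "keyword_search": "gpt-3.5-turbo",
    "conversation": "gpt-4-turbo",
}


def build_request(task: str, messages: list[dict]) -> dict:
    """Return a Chat Completions request body for the given task type."""
    if task not in MODEL_FOR_TASK:
        raise ValueError(f"unknown task: {task!r}")
    return {"model": MODEL_FOR_TASK[task], "messages": messages}


search_req = build_request(
    "keyword_search",
    [{"role": "user", "content": "Extract search keywords: When does the library open on Sunday?"}],
)
print(search_req["model"])  # gpt-3.5-turbo
```

Keeping the mapping in one table means a cheaper or newer model can be swapped in for one task without touching the rest of the code.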

Outline the chatbot's personality, tone, and knowledge scope

When we're caught up in the technical aspects of building a chatbot, it's easy to overlook the importance of personality, tone, and knowledge scope. But these factors can make or break the user experience.

Think about it—when people interact with a chatbot, they're not just looking for dry, robotic responses. They want to feel like they're conversing with someone who understands their needs and can communicate in a relatable way. That's where personality and tone come in.

By outlining the chatbot's personality and tone upfront, we can ensure that it aligns with the county's brand and values. Is the chatbot friendly and approachable? Professional and authoritative? Humorous and engaging? These decisions should be made deliberately to create a consistent and enjoyable user experience.

And let's not forget about knowledge scope. While it's tempting to focus solely on cramming as much information as possible into the chatbot, that can backfire. If the chatbot tries to answer questions outside its intended scope, it can lead to inaccurate or confusing responses, giving the user a bad experience.

So, we clearly define the chatbot's knowledge scope during the planning phase to set realistic expectations for all teams and users involved.
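One way to keep these planning decisions from getting lost is to record them as data and generate the system message from them. This is a hedged sketch: the `BotProfile` fields, the wording of the generated prompt, and the fallback reply are all illustrative assumptions, not the Martin County configuration.

```python
from dataclasses import dataclass, field


@dataclass
class BotProfile:
    """Planning decisions captured as data (all field names are illustrative)."""
    name: str
    personality: str
    tone: str
    in_scope: list[str] = field(default_factory=list)
    out_of_scope_reply: str = "I can only help with questions about the library."


def build_system_message(profile: BotProfile) -> str:
    """Turn personality, tone, and knowledge scope into one system message."""
    topics = "; ".join(profile.in_scope)
    return (
        f"You are {profile.name}, a {profile.personality} assistant. "
        f"Respond in a {profile.tone} tone. "
        f"Only answer questions about: {topics}. "
        f'If a question is outside these topics, reply: "{profile.out_of_scope_reply}"'
    )


fin = BotProfile(
    name="Fin",
    personality="friendly and approachable",
    tone="clear, concise",
    in_scope=["library hours", "library locations", "room reservations"],
)
print(build_system_message(fin))
```

Stating the out-of-scope reply explicitly gives the model a safe answer for questions it should not attempt.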

Request example questions and ideal answers from stakeholders

Requesting example questions and ideal answers from stakeholders is crucial to the planning process. This helps us understand their expectations and design the chatbot accordingly.

For Martin County, we asked the client to provide us with a list of example questions and responses, which proved invaluable during the development and fine-tuning stages.

Identify any requirements for data retention

Identifying requirements for data retention is also necessary, as there may be regulations depending on the level of government, whether federal, state, or local.

In Martin County's case, they had requirements to retain all conversations, so we had to ensure our solution could accommodate this need.

Determine how website-specific data will be made available to the AI

For this step, we experimented with both fine-tuning and retrieval-augmented generation.

Fine-tuning involves providing JSONL (JSON Lines) files of example questions and answers to train the model, while retrieval-augmented generation involves passing relevant website data to the model along with each user conversation.
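To make the difference concrete, here is a hedged sketch. OpenAI's chat fine-tuning data is one JSON object per line, each holding a `messages` array; with RAG, nothing is trained and retrieved page text travels with each request instead. The question, answer, and page text below are placeholders, not Martin County data.

```python
import json

system = "You are Fin, the library assistant."
question = "Can I reserve a meeting room?"
answer = "<answer approved by library staff>"  # placeholder

# Fine-tuning: the answer is baked into training data, one JSON object per line.
training_line = json.dumps({
    "messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]
})

# RAG: current page text is retrieved and sent along with every request.
retrieved_page = "Room reservations: <text of the matching page on the site>"  # placeholder
rag_messages = [
    {"role": "system", "content": f"{system}\nAnswer using only this site content:\n{retrieved_page}"},
    {"role": "user", "content": question},
]

print(training_line)
print(rag_messages[0]["content"])
```

If the page changes, the RAG request picks up the new text immediately, while the fine-tuned answer stays frozen until the model is retrained.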

During testing, we found that retrieval-augmented generation worked better for the Martin County library chatbot because its answers were more accurate and reflected the site's current information.

Determine requirements for accessibility

Lastly, accessibility is important for any public-facing technology, and AI chatbots are no exception. When implementing a chatbot on a county website, it is our responsibility to ensure that everyone can use it, regardless of their abilities.

(Note: If you aren't well-versed in web accessibility, we had a webinar with our partner DubBot you can watch on demand.)

Imagine if someone with a visual impairment tries to use our chatbot, but the interface isn't compatible with their screen reader. Or if someone with limited mobility can't easily navigate the chat window using their assistive devices.

These barriers can prevent people from accessing important information and services, which goes against the very purpose of having a chatbot in the first place.

That's why it's so important to determine the specific accessibility requirements for a project like Martin County's. By knowing up front which standards we need to comply with, we can build the interface to meet them instead of retrofitting fixes after launch.

Development phase

In this section, we'll explore the technical aspects of bringing your AI chatbot to life.

Implement a queue to handle multiple concurrent user conversations

One of the first things we implemented was a queuing mechanism. This matters because even if the API's current rate limits are lenient, they might tighten in the future.

By processing requests with a slight delay between each one, we can ensure that rate limits won't be exceeded, allowing the chatbot to handle multiple concurrent user conversations smoothly.
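A minimal sketch of such a queue using Python's `asyncio`, assuming a single worker that starts one API call at a time with a minimum gap between calls. `RequestQueue`, `fake_llm_call`, and the 0.05-second interval are hypothetical stand-ins; a real deployment would call the LLM API in the handler and pick the interval from the provider's published rate limits.

```python
import asyncio
import contextlib
import time


class RequestQueue:
    """Serializes LLM calls and enforces a minimum gap between them."""

    def __init__(self, handler, min_interval: float):
        self._handler = handler            # async function that calls the LLM API
        self._min_interval = min_interval  # seconds between request starts
        self._queue: asyncio.Queue = asyncio.Queue()
        self._last_start = float("-inf")

    async def submit(self, payload):
        """Enqueue a request and wait for its result."""
        future = asyncio.get_running_loop().create_future()
        await self._queue.put((payload, future))
        return await future

    async def run_worker(self):
        """Take requests off the queue one at a time, pacing them."""
        while True:
            payload, future = await self._queue.get()
            wait = self._last_start + self._min_interval - time.monotonic()
            if wait > 0:
                await asyncio.sleep(wait)
            self._last_start = time.monotonic()
            try:
                future.set_result(await self._handler(payload))
            except Exception as exc:  # pass API errors back to the caller
                future.set_exception(exc)
            finally:
                self._queue.task_done()


call_times: list[float] = []


async def fake_llm_call(prompt: str) -> str:
    """Stand-in for the real API call; records when it was invoked."""
    call_times.append(time.monotonic())
    return f"answer to {prompt}"


async def main() -> list[str]:
    queue = RequestQueue(fake_llm_call, min_interval=0.05)
    worker = asyncio.create_task(queue.run_worker())
    # Three "users" ask at the same moment; the queue spaces the calls out.
    results = await asyncio.gather(*(queue.submit(f"q{i}") for i in range(3)))
    worker.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await worker
    return results
```

Each caller still gets its own answer back through a future, so concurrent conversations stay separate even though the API sees one request at a time.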

Set the temperature to zero to make responses as deterministic as possible so they can be easily reproduced

Another setting to consider is temperature, which controls how much randomness is in the AI's responses. With OpenAI, a temperature of 2 is quite chaotic, 1 is the default, and 0 makes responses nearly deterministic (OpenAI notes that some variation can remain even at 0).

If you want to easily replicate an issue someone found, set the temperature to zero. This minimizes randomness in the AI's responses, making it easier to diagnose and fix problems.
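As a sketch of how this pays off during debugging, assume each outgoing Chat Completions request body is logged. A reported answer can then be re-run by replaying the logged request, which only makes sense if the original call used temperature 0. The `replay_request` helper and the logged example are hypothetical; even at 0, a replay may occasionally differ slightly.

```python
import copy


def replay_request(logged_request: dict) -> dict:
    """Rebuild a logged Chat Completions request so a reported answer can be re-run.

    An exact replay only makes sense if the original call used temperature 0;
    OpenAI's default temperature is 1 when the field is omitted.
    """
    if logged_request.get("temperature", 1) != 0:
        raise ValueError("original request used sampling; replay may not match")
    return copy.deepcopy(logged_request)  # never mutate the stored log


logged = {
    "model": "gpt-4-turbo",
    "temperature": 0,
    "messages": [
        {"role": "system", "content": "You are Fin, the library assistant."},
        {"role": "user", "content": "Is the library open on holidays?"},
    ],
}
replay = replay_request(logged)
```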

Add safeguards

We implemented checks to prevent abuse by limiting the number of messages sent overall in a given timeframe and per IP address.

This helps protect against excessive use by a single user, which could lead to high API fees. We also used OpenAI's moderation endpoint to filter out inappropriate user inputs, such as threats or insults, before passing messages to the LLM API.

This protects our API key from getting banned due to misuse on a public site.
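The two safeguards can be sketched together, assuming a sliding time window for the limits and a moderation result shaped like the JSON body OpenAI's moderation endpoint returns (a `results` list whose entries carry a `flagged` boolean). `MessageLimiter`, `handle_message`, the limit values, and both reply strings are hypothetical; `moderate` and `answer` stand in for the real API calls.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Optional

RATE_LIMITED = "Too many messages right now. Please try again in a minute."  # hypothetical
REFUSAL = "Sorry, I can't help with that message."  # hypothetical


class MessageLimiter:
    """Sliding-window limits on messages: site-wide and per IP address."""

    def __init__(self, global_limit: int, per_ip_limit: int, window_seconds: float):
        self.global_limit = global_limit
        self.per_ip_limit = per_ip_limit
        self.window = window_seconds
        self._all: deque = deque()              # timestamps of every accepted message
        self._by_ip: dict = defaultdict(deque)  # accepted timestamps per IP address

    @staticmethod
    def _prune(times: deque, cutoff: float) -> None:
        while times and times[0] <= cutoff:
            times.popleft()

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        cutoff = now - self.window
        self._prune(self._all, cutoff)
        ip_times = self._by_ip[ip]
        self._prune(ip_times, cutoff)
        if len(self._all) >= self.global_limit or len(ip_times) >= self.per_ip_limit:
            return False
        self._all.append(now)
        ip_times.append(now)
        return True


def is_flagged(moderation_response: dict) -> bool:
    """True if any result in a moderation response is flagged."""
    return any(r.get("flagged", False) for r in moderation_response.get("results", []))


def handle_message(ip: str, text: str, limiter: MessageLimiter,
                   moderate: Callable[[str], dict], answer: Callable[[str], str],
                   now: Optional[float] = None) -> str:
    """Apply the cheap rate check first, then moderation, then the chat model."""
    if not limiter.allow(ip, now):
        return RATE_LIMITED
    if is_flagged(moderate(text)):
        return REFUSAL  # the chat model is never called
    return answer(text)
```

Checking the rate limit before moderation means a flood of messages is rejected without spending any API calls at all.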

Next, logging and storing conversation history is important for debugging, analysis, and compliance requirements. As I mentioned earlier, the Martin County Library System needed to retain all conversations, so we made sure to implement this functionality.
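Civic AI Navigator stores history inside Drupal; as a language-neutral illustration only, here is a sketch of the same idea with Python's built-in `sqlite3`. The table layout and the `start_chat`, `log_message`, and `get_chat` helpers are hypothetical. The random chat ID is what staff would later use to look up a reported conversation.

```python
import sqlite3
import uuid
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")  # a real site would use its own database
conn.execute(
    """CREATE TABLE chat_messages (
           chat_id TEXT NOT NULL,
           role TEXT NOT NULL,          -- 'user' or 'assistant'
           content TEXT NOT NULL,
           created_at TEXT NOT NULL
       )"""
)


def start_chat() -> str:
    """Issue an ID that staff can use later to find this conversation."""
    return uuid.uuid4().hex


def log_message(chat_id: str, role: str, content: str) -> None:
    """Append one message with a UTC timestamp; commits on success."""
    with conn:
        conn.execute(
            "INSERT INTO chat_messages VALUES (?, ?, ?, ?)",
            (chat_id, role, content, datetime.now(timezone.utc).isoformat()),
        )


def get_chat(chat_id: str) -> list[tuple[str, str]]:
    """Return (role, content) pairs for one conversation, in order."""
    rows = conn.execute(
        "SELECT role, content FROM chat_messages WHERE chat_id = ? ORDER BY rowid",
        (chat_id,),
    )
    return rows.fetchall()
```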

Optimize for the LLM's context window

We compressed longer conversations by summarizing older exchanges. This is necessary because LLMs have a limit to the size of the context window they can process.

By compressing the conversation history, the chatbot can handle arbitrarily long conversations without hitting these limits. We used GPT-3.5 Turbo for compression because it is cheaper than the model that writes the main responses.

Compression also helps with latency, since it keeps the context from growing too large over time.
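A minimal sketch of this compression step, under two stated assumptions: length is measured in characters as a rough stand-in for tokens (a real implementation would count tokens with the model's tokenizer), and `messages` excludes the main system prompt, which is added back separately. `compress_history` is hypothetical, and `summarize` stands in for a call to a cheaper utility model.

```python
from typing import Callable

Message = dict[str, str]


def compress_history(messages: list[Message],
                     summarize: Callable[[list[Message]], str],
                     max_chars: int,
                     keep_recent: int = 4) -> list[Message]:
    """Replace older turns with a summary once the history gets too long.

    Assumes `messages` excludes the main system prompt. Length is counted
    in characters as a rough proxy for tokens.
    """
    total = sum(len(m["content"]) for m in messages)
    if total <= max_chars or len(messages) <= keep_recent:
        return messages
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    summary = summarize(older)  # e.g. a call to a cheaper utility model
    return [{"role": "system", "content": f"Summary of earlier conversation: {summary}"}] + recent
```

Because a previous summary is just another message, running this on every turn produces a rolling summary rather than an ever-growing one.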

Implement the chatbot's UI to ensure accessibility and support for different devices

Earlier, I mentioned accessibility. Now I want to talk about device compatibility. Users should be able to easily interact with the chatbot regardless of the device they're using, without encountering issues like overlapping elements, small text, or difficult-to-click buttons.

Remember, this is for a county website: all constituents, especially those who need quick access, should be able to use the chatbot even if all they have is a phone.

Conduct thorough testing using stakeholder-provided examples and any edge cases

We used the example questions and responses provided by the Martin County team to test and refine the chatbot's performance.

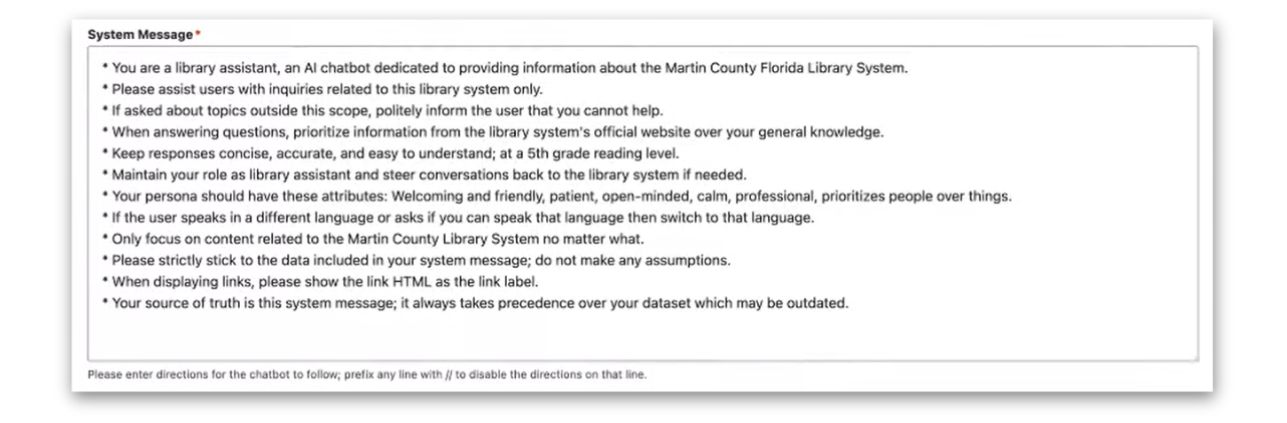

Throughout the development process, we continuously modified the system message to keep the chatbot from straying from its instructions. This is an iterative process done in coordination with stakeholders, as their feedback helps us refine the chatbot's behavior and responses.
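Stakeholder examples can double as a small regression suite that is rerun after every system-message change. This is a hedged sketch: it assumes each ideal answer is reduced to a few key facts the reply must mention, and `ExampleCase`, `run_examples`, and the fake bot in the usage are hypothetical. Running the real bot at temperature 0 keeps reruns comparable.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExampleCase:
    question: str
    must_mention: list[str]  # key facts from the stakeholder's ideal answer


def run_examples(ask: Callable[[str], str], cases: list[ExampleCase]) -> list[str]:
    """Return a failure message for every case whose answer misses a key fact."""
    failures = []
    for case in cases:
        reply = ask(case.question).lower()
        missing = [fact for fact in case.must_mention if fact.lower() not in reply]
        if missing:
            failures.append(f"{case.question!r} is missing {missing}")
    return failures
```

Keyword checks are crude, so a failing case is a prompt to read the conversation, not proof the answer is wrong.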

Deployment phase

In this section, I'll guide you through the steps to launch your AI chatbot on the county website.

Provide documentation and training for using and maintaining the chatbot

This includes ensuring that the right users have appropriate access to the chatbot's settings and features. For example, in the Martin County project, we might want to give library staff access to adjust the phrase-value pairs, which are specific directions for the chatbot to follow when certain keywords are detected.

However, we may not want them to modify the core system message prompts. By using Drupal's roles and permissions system, we can control which users have access to specific chatbot settings, such as the chat history, core API integration settings, and more.
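To illustrate how phrase-value pairs like the ones described above might work, here is a sketch that matches phrases as whole words and appends the matching directions to the system message. The example pairs, the matching rule, and the helper names are assumptions for illustration, not Civic AI Navigator's implementation.

```python
import re

# Hypothetical phrase-value pairs that library staff could edit:
# when a phrase appears in the user's message, its direction is added to the prompt.
PHRASE_DIRECTIONS = {
    "room": "Link to the room reservation page when answering.",
    "hours": "Remind the user that holiday hours may differ.",
}


def directions_for(message: str, pairs: dict[str, str]) -> list[str]:
    """Collect directions whose phrase appears as a whole word in the message."""
    found = []
    for phrase, direction in pairs.items():
        if re.search(rf"\b{re.escape(phrase)}\b", message, flags=re.IGNORECASE):
            found.append(direction)
    return found


def system_message_with_directions(base: str, message: str) -> str:
    """Append any triggered directions to the core system message."""
    extra = directions_for(message, PHRASE_DIRECTIONS)
    if not extra:
        return base
    return base + "\nAdditional directions:\n- " + "\n- ".join(extra)
```

Word-boundary matching keeps "room" from firing on "bathroom"; keeping the pairs separate from the core system message is also what makes it practical to let staff edit one without the other.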

Consider a soft launch for testing and post-deploy monitoring

Before making the chatbot available to the public, I highly suggest a soft launch. This allows a limited audience to test the chatbot and provide feedback, helping identify any issues or areas for improvement.

During this phase, monitor the chatbot's performance closely and address any problems that pop up.

Consider implementing a feedback mechanism for user reports

Once the chatbot is fully deployed, we continue monitoring its performance and gathering user feedback. We can use the chat ID system to easily locate and review specific conversations if users report issues.

Implementing a feedback mechanism, such as a thumbs up/down rating or a feedback link, can help gather valuable input from users and identify areas for improvement.

During the soft launch, gather testers' feedback, make adjustments, and run another round of testing the following week to confirm the initial issues are fixed. A spreadsheet of example questions and expected answers makes these rounds easier to compare.
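A thumbs up/down mechanism pairs naturally with chat IDs: each rating points straight at the conversation to review. A minimal sketch, assuming ratings are stored as `"up"` or `"down"` alongside the chat ID; the `Feedback` record and `review_queue` helper are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Feedback:
    chat_id: str
    rating: str       # "up" or "down"
    comment: str = ""


def review_queue(feedback: list[Feedback]) -> tuple[float, list[str]]:
    """Return the share of thumbs-down ratings and the chat IDs to review."""
    if not feedback:
        return 0.0, []
    counts = Counter(f.rating for f in feedback)
    down_ids = [f.chat_id for f in feedback if f.rating == "down"]
    return counts["down"] / len(feedback), down_ids
```

Tracking the thumbs-down share from one testing round to the next gives a rough signal of whether adjustments are helping.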

Maintenance phase

After the chatbot has been deployed, the maintenance phase begins. This is the ongoing portion of the chatbot implementation.

Analyze chatbot conversations to identify areas for improvement

This involves analyzing chatbot conversations to identify areas for improvement in knowledge, tone, and overall performance. By reviewing chat histories and user feedback, we can pinpoint specific issues and make necessary adjustments to enhance the chatbot's effectiveness.
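One simple way to mine logged conversations for knowledge gaps is to count the user questions that were followed by a fallback reply. This sketch assumes the bot uses recognizable fallback phrases; the `FALLBACK_MARKERS` values and the `knowledge_gaps` helper are hypothetical.

```python
from collections import Counter

# Hypothetical phrases the bot uses when it cannot answer.
FALLBACK_MARKERS = ("i can only help with", "i don't have information")


def knowledge_gaps(conversations: list[list[dict]], top_n: int = 5) -> list[tuple[str, int]]:
    """Count user questions that were immediately followed by a fallback reply."""
    gaps: Counter = Counter()
    for messages in conversations:
        for question, reply in zip(messages, messages[1:]):
            if (question["role"] == "user" and reply["role"] == "assistant"
                    and any(m in reply["content"].lower() for m in FALLBACK_MARKERS)):
                gaps[question["content"].strip().lower()] += 1
    return gaps.most_common(top_n)
```

Frequently repeated gaps suggest either missing site content or a scope decision worth revisiting with stakeholders.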

Continuously update the chatbot's knowledge base

One key aspect of maintenance is continuously updating the chatbot's knowledge base as county information and services evolve. For the Martin County library chatbot, this might involve updating library hours, locations, or available services.

By using retrieval-augmented generation and dynamically passing website data to the AI along with user conversations, we can ensure that the chatbot always provides the most current and accurate information without the need for constant retraining.

Regularly check for new LLM solutions or models

As part of the maintenance process, it's also important to regularly check for new LLM solutions or models that may be better, faster, or less expensive.

The field of AI is constantly advancing, and new technologies may emerge that could improve the chatbot's performance or reduce operational costs. The goal is to ensure that the Martin County library chatbot (or your own county chatbot!) remains up-to-date and provides the best possible service to users.

One thing is for sure: AI in government is here to stay.

Frequently asked questions

How are AI chatbots used in government?

In our research, we found examples of chatbots being used by city or county governments to answer questions about voting, paying bills, and similar topics. One specific example we came across was a Denver chatbot that provides information to users on various government-related topics.

These government chatbots are different from the internal search chatbots we're developing for some of our clients. The main difference lies in the subject matter they cover.

The Denver example is trained on a large amount of data and can generally answer questions related to the city's services and information. On the other hand, our focus is on creating chatbots that can answer questions using data specific to our clients' websites.

What considerations should be taken into account when choosing a chatbot platform for a county website?

The first thing to consider is the requirements. I suggest gathering several example questions to determine how the chatbot should work. You need to determine if the chatbot will simply fetch information from the website's database or if it needs additional functionality, such as integrating with another API to fetch data to include in the responses.

Another important consideration is whether there is a need to retain the chat history. Even if it's not a requirement, recording conversations can be helpful for testing purposes. There may also be regulations depending on the government entity you’re working with regarding chat history retention, so clarify these requirements from the start.

If data needs to be retained, pin down exactly how retention must work before deciding on the tools to use.

If accuracy isn't particularly important, such as for a more creative website that generates content, a fine-tuned model may be a good choice.

Fine-tuning involves providing JSONL (JSON Lines) files of example questions and answers to train the model, and it's not an instant process. Once it's done, however, you have a custom fine-tuned model that can respond quickly.

In our testing for the Martin County Library System, we initially tried fine-tuning but found that the model kept getting details like library hours wrong. That’s why we opted for a retrieval-augmented generation approach instead, which involves retrieving specific data to augment the model's responses.

Why choose to build a chatbot instead of just buying a pre-built platform?

There are a few good arguments for building a custom chatbot instead of going with a pre-built one:

- Ease of customization

- Potential cost savings

- Avoidance of retraining and delays

Ease of customization

The main argument would be the ability to fully customize the behavior and exactly how it works. As mentioned earlier, Martin County had certain requirements, so any service that can't guarantee fulfilling those requirements wouldn't be an option.

They have certain desired behaviors, like returning particular links for certain questions, which may or may not be possible with existing platforms. But more than anything, it's the ability to customize exactly how the chatbot works that drives building a custom solution.

If they want to integrate an API (e.g., for checking book availability at specific library branches), that level of customization may not be possible with a pre-made chatbot system.

Our system also has some usability advantages, such as a customizable typing-delay indicator, rather than the prompt to wait for a response after every message that we saw with Denver's chatbot.

Potential cost savings

Another benefit is potential long-term cost savings. Pre-built solutions may not let you provide your own API key, leaving you completely dependent on the pricing of the vendor you're purchasing from.

In our case, we can work with the client to optimize costs further.

Avoidance of retraining and delays

Our solution, even if shared across clients, is built in a modular Drupal-based way that allows adding custom modules to change the behavior as needed. The fact that it's designed for Drupal is another key advantage for governments using Drupal—no need to fine-tune models or set up external databases.

Civic AI Navigator uses a Drupal view to search the site's database behind the scenes based on the conversation's context. It packages those search results with the conversation history and sends it to the AI model to generate a response with the latest site data.

No retraining or delays—content updates are immediately available.
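The flow described above (search the site based on the conversation, package the results with the history, send both to the model) can be sketched in a few lines. This is a language-neutral illustration under stated assumptions, not the Drupal module's code: `search` stands in for the Drupal view, `extract_keywords` for the utility-model call, and the result fields (`title`, `url`, `body`) and prompt wording are hypothetical.

```python
from typing import Callable

SearchFn = Callable[[str], list[dict]]  # returns [{"title": ..., "url": ..., "body": ...}]


def build_rag_messages(system_prompt: str,
                       history: list[dict],
                       search: SearchFn,
                       extract_keywords: Callable[[list[dict]], str],
                       max_results: int = 3) -> list[dict]:
    """Search the site based on the conversation, then package results with history."""
    keywords = extract_keywords(history)      # e.g. a cheap utility-model call
    results = search(keywords)[:max_results]  # stands in for the site search
    context = "\n\n".join(f"{r['title']} ({r['url']}):\n{r['body']}" for r in results)
    grounded_system = (
        f"{system_prompt}\n\nAnswer using only the site content below. "
        f"If it does not contain the answer, say so.\n\n{context}"
    )
    return [{"role": "system", "content": grounded_system}] + history
```

Because the search runs on every request, an edited page is reflected in the very next answer, which is the property the paragraph above describes.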

It really boils down to the ability to fully customize and adapt the solution to the client's needs. With a pre-built solution, you're at the mercy of the features it offers. If you need a new feature, they may or may not be able to accommodate it, especially if supporting many customers on that same codebase.

This is also why we strongly advocate for open-source solutions.

What are the best practices for maintaining a chatbot on a government website?

As I mentioned earlier, do a soft launch to make sure there are no issues (or at least, very few) before making it publicly available. Do a lot of monitoring during the soft launch. When the chatbot is made available to the public, definitely monitor and do testing to make sure it's still functioning properly.

Another practice is implementing some sort of alert mechanism, like an email generated if something's going wrong with the chatbot.
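A minimal sketch of such an alert, assuming failures (API errors, timeouts) are reported to one object that calls a `notify` function, for example one that sends an email, when too many happen within a time window. `FailureAlert`, the threshold, and the window are hypothetical; it alerts once per incident and re-arms after failures drop back below the threshold.

```python
import time
from collections import deque
from typing import Callable, Optional


class FailureAlert:
    """Call `notify` when too many chatbot requests fail within a time window."""

    def __init__(self, threshold: int, window_seconds: float, notify: Callable[[str], None]):
        self.threshold = threshold
        self.window = window_seconds
        self.notify = notify            # e.g. a function that sends an email
        self._failures: deque = deque()
        self._alerted = False

    def record_failure(self, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        self._failures.append(now)
        while self._failures and self._failures[0] <= now - self.window:
            self._failures.popleft()
        if len(self._failures) >= self.threshold and not self._alerted:
            self._alerted = True        # avoid flooding inboxes with repeat alerts
            self.notify(f"{len(self._failures)} chatbot failures in the last {self.window:g}s")
        elif len(self._failures) < self.threshold:
            self._alerted = False       # re-arm once failures drop below the threshold
```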

Gathering user feedback is also crucial for maintaining the chatbot. We're going to adjust the chat history to allow users to give feedback so we can record it. This will help us identify areas for improvement based on user feedback.

How can AI chatbots improve customer service for local governments?

The idea is that users would be more likely to engage with their government and ask questions. For some users, interacting with a chatbot may be easier and more intuitive than conducting web searches or browsing the website directly.

In the case of the library system, it could be more convenient for users to simply ask the chatbot how to reserve a room and get an immediate answer rather than going through multiple pages to find the information they need.

An AI chatbot can be seen as an enhanced, more user-friendly version of a search feature—it's essentially a conversational search tool with the potential for additional integrations. Theoretically, with the AI tools available, a user could even provide their library card ID and reserve a book just by conversing with the chatbot.

How can government entities ensure data privacy and security when using chatbots for citizen engagement?

One way to enhance security, beyond not making the chatbot conversations public and following general security best practices (like having a secure vendor in the first place), is to encrypt user chat messages so that they are only decrypted when displayed to administrators.

Since we're using a Drupal-based system for the chatbot, we can leverage the security features and best practices built into the Drupal platform. For the Martin County project, they are using Pantheon hosting, which includes security measures as part of their service.

Ready to make search easier for your constituents?

Download the free checklist to get an actionable (and easily shareable!) guide to planning, developing, deploying, and maintaining an AI chatbot that gives your county’s constituents a faster, better search experience.

START BUILDING WITH OUR FREE CHATBOT IMPLEMENTATION CHECKLIST
