Understanding VPAT® Conformance Levels: A Practical Guide

Takeaway: When evaluating VPATs®, don't just look for checkboxes and perfect compliance scores. A thorough VPAT® evaluation requires understanding both basic and complex conformance documentation, recognizing red flags, and assessing the vendor's overall commitment to accessibility.

What matters most is whether the technology will work effectively for all users in real-world situations, and that means looking beyond conformance levels to consider practical implementation, user impact, and long-term accessibility support.

You’re part of a federal procurement team evaluating technology accessibility, and you've received a VPAT® showing "Partially Supports" across multiple criteria. But what does this really mean? Does "Partially Supports" indicate 80% conformance or 20%? And how can you tell the difference?

Note: For background on what a VPAT® is and its role in federal procurement, see our public sector guide to VPATs®. Today, we're diving into understanding and evaluating conformance levels.

The conformance evaluation challenge

You face an important challenge when reviewing VPATs®: interpreting conformance levels effectively. As I mentioned during our roadmap to VPAT® webinar, "Any ACR that shows total conformance and is more than a very basic technology is suspect." (An ACR, or Accessibility Conformance Report, is a completed VPAT®.)

This insight reveals that evaluating accessibility conformance requires more nuance than simply looking for "Supports" across the board.

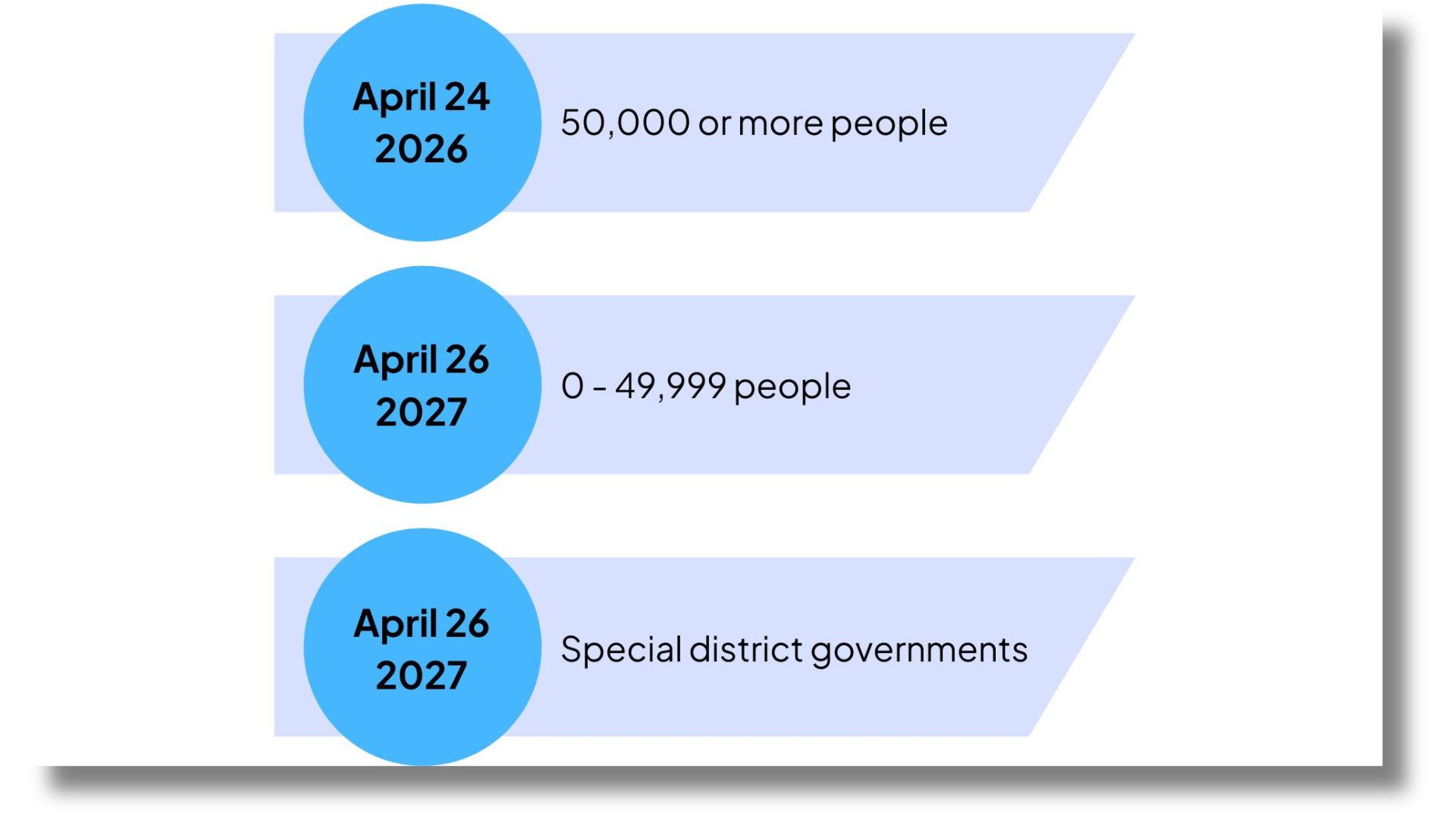

Why understanding conformance levels matters now

The Department of Justice's 2024 rule under Title II of the ADA has put new pressure on state and local governments to ensure the technology they purchase meets accessibility standards. With compliance deadlines approaching in 2026 and 2027, procurement teams need a practical framework for evaluating conformance levels and making informed decisions.

This guide will walk you through:

- How to interpret each conformance level with real examples

- A practical framework for evaluating complex conformance documentation

- Red flags that indicate potential conformance issues

- How to look beyond basic conformance levels in your assessment

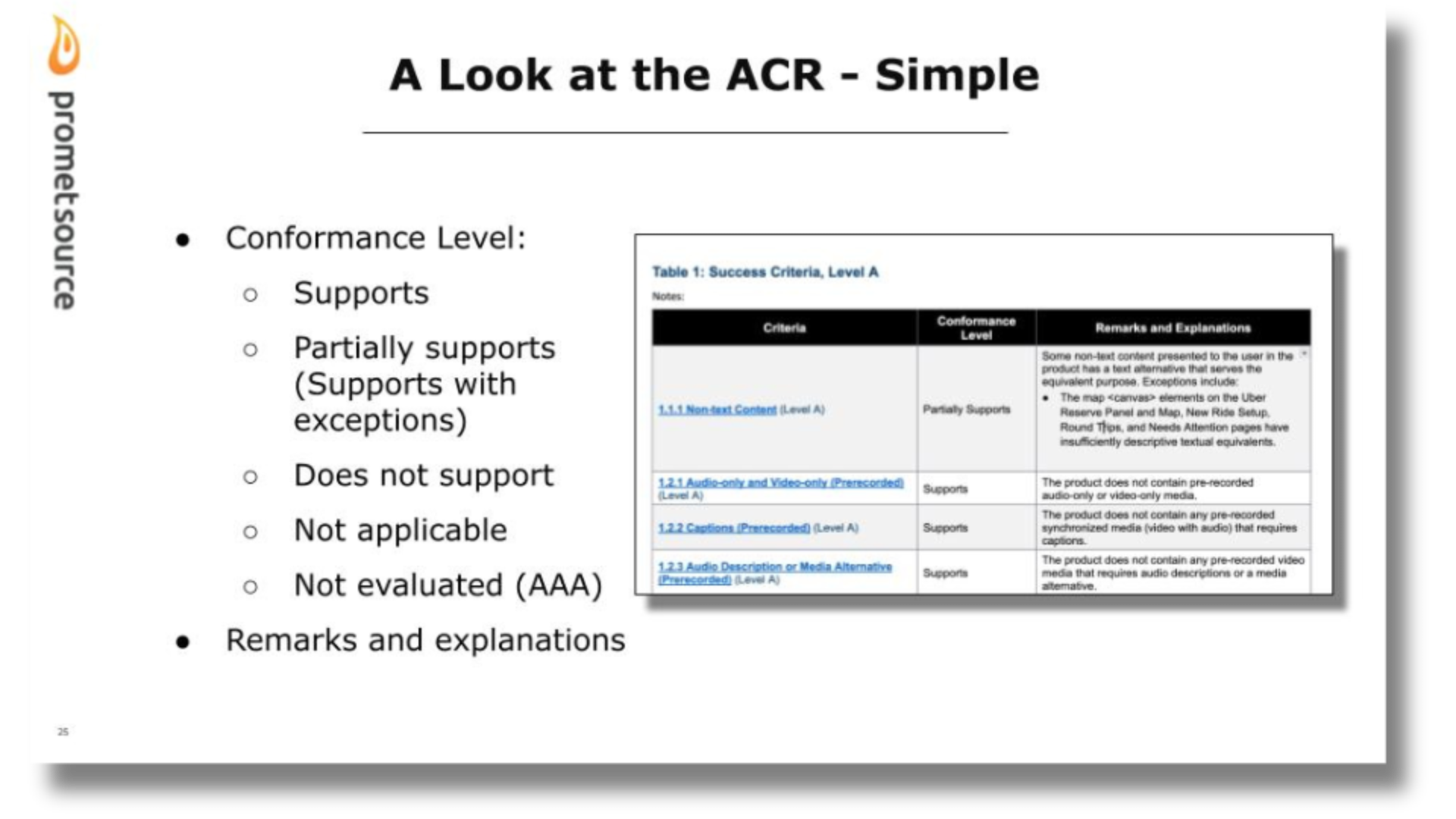

The five conformance levels

In a VPAT®'s Conformance Level column, you'll encounter five possible levels.

Let's explore these through a real procurement scenario: A county fire department must purchase software for tracking emergency calls and managing firefighter training certifications.

They've identified three potential vendors, each providing a VPAT® for evaluation.

"Supports"

When a feature fully meets accessibility requirements, you'll see "Supports." For example, if the emergency call logging interface "Supports" keyboard navigation, it means firefighters can input critical incident details using only their keyboard—an essential capability in high-pressure situations or when using computers in various emergency response settings.

“Supports” may also be used when a criterion covers content that the product could include but doesn't. For example, if the software doesn’t use videos, Success Criterion “1.2.1 Audio-only and Video-only (Prerecorded)” may still be given a “Supports” designation.

"Partially Supports"

This conformance level requires scrutiny because it covers a wide range: it can mean one minor exception or failures across much of the product. In our example, one vendor's VPAT® shows "Partially Supports" for error identification. The remarks column reveals:

"Where the product automatically detects input errors, the item or field in error is sometimes identified and described to the user in text. Exceptions include:

- Error messages in the New Ride Setup and Round Tips pages lack clear identification

- Some form errors aren't programmatically associated with their fields"

For the fire department, this means some critical error messages might not be communicated to users—a potential issue when logging time-sensitive emergency data.

"Does Not Support"

This level means that most of the product's functionality does not meet the criterion. For example, if multimedia content documenting incident protocol training has no captions, this limits access for firefighters who are deaf or hard of hearing and for those who need to review procedures in noise-sensitive environments.

While all accessibility issues matter, the priority level depends on how critical that feature is for core emergency response functions.

"Not Applicable"

This indicates that the criterion doesn't apply to the product. In our fire department software evaluation, we might see "Not Applicable" for pre-recorded video requirements if the software is purely form-based for incident logging. However, this level warrants a clear explanation, so be wary if the vendor provides none.

"Not Evaluated"

This means the product has not been evaluated against the criterion, and it typically appears only for WCAG Level AAA criteria. While important to note, Level AAA conformance isn't required for federal procurement under Section 508.

Complex conformance documentation

Let’s say one of the vendors gives our fire department a more complex edition of the VPAT®. For example, the multiple component types described below appear in the 508, EU, and INT editions of the VPAT® but not in the simpler WCAG edition.

Which edition a vendor uses depends on the markets the software is sold in.

Breaking down component-specific conformance

Let's say their system includes:

- Web-based emergency call logging interface

- Desktop application for managing training certifications

- Mobile app for field updates

- PDF report generation capability

| Component Type | Conformance Level | Remarks and Explanations |
|---|---|---|
| Web Interface | Web: Partially Supports | The emergency call logging interface keyboard navigation works throughout most screens, with exceptions in the dispatch queue management interface. |
| Desktop Software | Software: Partially Supports | The certification management system supports screen readers but lacks some keyboard shortcuts for advanced features. |
| Electronic Documents | Electronic Docs: Supports | Generated PDF reports include proper headings, alternative text for charts, and are properly tagged for screen reader access. |

The remarks section in more sophisticated VPATs® becomes particularly important. For example, a detailed remarks section might look like this:

| Criteria | Conformance Level | Remarks and Explanations |
|---|---|---|
| 3.3.1 Error Identification (Level A) | Web: Partially Supports | Web: Where the product automatically detects input errors, the item or field in error is sometimes identified and described to the user in text. Specific locations: Emergency Response Form: All validation errors are properly identified; Dispatch Queue: Some form errors aren't programmatically associated with their fields; Training Record Updates: Error messages properly associated with fields |

Components of complex conformance documentation

When lives depend on software accessibility—as with an emergency response system—understanding complex conformance documentation becomes doubly important. Here's what to focus on and why:

1. Component-specific evaluations

In emergency response software, different components serve different functions. For example, a dispatch operator using a screen reader needs the web interface to be fully accessible, while field firefighters need the mobile app to work with voice commands when their hands are occupied.

That's why conformance documentation separates evaluations by component:

| Component Type | Why It Matters | What to Look For |
|---|---|---|
| Web Interface | Primary tool for dispatch operators who may rely on assistive technologies | Focus on keyboard navigation, screen reader compatibility, and error identification |
| Desktop Software | Used for longer-term tasks like certification management | Emphasis on complex workflows and data entry accessibility |
| Mobile Apps | Critical for field operations | Voice command support, high-contrast modes for sunlight visibility |
| Document Generation | Essential for reporting and accountability | Proper structuring for screen readers, accessible charts and tables |

2. Testing documentation

Testing information reveals how thoroughly the software has been evaluated under real-world conditions. For emergency response software, this is particularly important because:

- It shows whether the software was tested with the actual assistive technologies your team uses

- It reveals whether mission-critical functions (like dispatch alerts) were specifically evaluated

- It indicates whether testing included high-stress scenarios typical in emergency response

3. Issue tracking integration

When vendors integrate their bug tracking with the VPAT®, it demonstrates their commitment to accessibility. Look for:

- Specific issue IDs that prove problems are being actively tracked

- Timeline commitments for critical fixes

- Temporary solutions or workarounds for known issues that could affect emergency operations

Common documentation patterns and why they matter

Understanding these patterns helps you evaluate whether a system will truly serve all your personnel effectively:

1. Hierarchical organization

When documentation is organized hierarchically, it helps you quickly assess impact on specific functions. For example:

| Pattern | Why It Matters | Example Impact |
|---|---|---|
| Feature-level Breakdown | Shows exactly where accessibility issues exist | Knowing that the dispatch queue has form field issues helps plan workarounds for affected staff |
| Component Relationships | Reveals how accessibility issues might cascade | Understanding that PDF generation pulls from all components helps assess report accessibility |
| Interface Mapping | Helps identify which user roles might be affected | Seeing that emergency response forms are fully accessible while training records have issues helps prioritize fixes |

2. Cross-referenced standards

Standards cross-referencing is crucial because:

- It helps ensure compliance with all relevant regulations

- It makes it easier to evaluate against your specific requirements

- It reveals gaps in compliance that might affect different user needs

3. Component interactions

Understanding how components interact helps you:

- Identify potential accessibility bottlenecks

- Plan for inclusive workflows across the entire system

- Ensure critical information flows remain accessible

For example, if the desktop app of our fire department software properly supports screen readers but the web interface it communicates with doesn't, this could create barriers for dispatch operators who need to quickly transfer information between systems.

Practical evaluation framework

With complex systems like emergency response software, you need a systematic approach to evaluate VPATs® effectively. Here's a practical framework that helps you move beyond just checking boxes to truly understanding whether a system will work for all your users.

Step 1: Evaluate the VPAT®'s foundation

Before diving into specific conformance levels, assess the VPAT®'s basic credibility:

| Warning Sign | Why It Matters | Example Red Flag |
|---|---|---|
| Age of Documentation | Software changes rapidly; old VPATs® may not reflect the current state | A VPAT® from 18 months ago doesn't reflect recent dispatch interface updates |
| Testing Methodology | Shows whether the evaluation was thorough and professional | Missing information about which screen readers were tested with the dispatch console |
| Testing Scope | Typically only a subset of representative screens or pages are tested | Depending on the quality of the scoping, it may or may not be a good representation of the existing accessibility issues |
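
If you track vendor documentation in a spreadsheet or script, the age check above is easy to automate. The following is a minimal Python sketch, not a standard tool: the 12-month cutoff and the function names are assumptions made for illustration, so set the threshold to whatever your agency's policy requires.

```python
from datetime import date

# Hypothetical cutoff; this guide only gives 18 months as an example of "too old".
MAX_AGE_MONTHS = 12


def months_between(earlier: date, later: date) -> int:
    """Return the number of whole months elapsed from earlier to later."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the final month has not fully elapsed yet
    return months


def is_stale(report_date: date, today: date,
             max_age_months: int = MAX_AGE_MONTHS) -> bool:
    """Flag a VPAT whose report date is older than the allowed age."""
    return months_between(report_date, today) > max_age_months
```

For example, `is_stale(date(2023, 1, 15), date(2024, 7, 15))` flags a report that is 18 months old. A stale date is only a prompt to ask the vendor for an updated report, not proof that the product has regressed.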

Step 2: Analyze conformance patterns

Pay attention to how conformance levels are distributed across features:

| Pattern | What It Might Mean | Example |
|---|---|---|
| All "Supports" | Could indicate either a simple product or superficial evaluation | A complex dispatch system claiming full support across all criteria warrants skepticism |
| Mixed Levels | Often more realistic for complex systems | Different conformance levels for dispatch interface vs. reporting functions |
| Mostly "Partially Supports" | May indicate honest assessment but needs deeper investigation | Emergency response forms with partial support need investigation of specific limitations |
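
When a VPAT® runs to dozens of criteria, a quick tally can show which of these three patterns you are looking at. Here is a rough Python sketch, assuming you have already copied the Conformance Level column into a list of strings. The function name and the "more than half" cutoff for "mostly" are illustrative assumptions, not part of the VPAT® format.

```python
from collections import Counter

# Levels that represent an actual rating of the product against a criterion.
RATED_LEVELS = ("Supports", "Partially Supports", "Does Not Support")

# Hypothetical cutoff for "mostly": more than half of the rated criteria.
MOSTLY_SHARE = 0.5


def classify_pattern(levels):
    """Label the distribution of conformance levels in one VPAT table."""
    # Ignore "Not Applicable" and "Not Evaluated" rows when judging the pattern.
    counts = Counter(level for level in levels if level in RATED_LEVELS)
    rated_total = sum(counts.values())
    if rated_total == 0:
        return "no rated criteria"
    if counts["Supports"] == rated_total:
        return 'all "Supports"'
    if counts["Partially Supports"] / rated_total > MOSTLY_SHARE:
        return 'mostly "Partially Supports"'
    return "mixed levels"
```

The label is only a starting point for review. An all "Supports" result on a complex dispatch system still calls for a close read of the remarks column.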

Step 3: Inspect critical features

For emergency response software, some features are non-negotiable. Focus your evaluation on mission-critical functions:

- Emergency response features

  - How well does the dispatch queue support screen readers?

  - Can keyboard-only users quickly enter emergency details?

  - Are error messages immediately perceivable in high-stress situations?

- Time-sensitive functions

  - Are alert notifications accessible through multiple means?

  - Can field personnel quickly access critical information using mobile devices?

  - Do status updates work with assistive technologies?

Step 4: Assess vendor commitment

A VPAT® captures just one point in time. Evaluate the vendor's broader commitment to accessibility:

| Indicator | What to Look For | Why It Matters |
|---|---|---|
| Issue Resolution | Specific timeline commitments for fixes | Shows whether critical accessibility barriers will be addressed quickly |
| Development Process | How accessibility testing is integrated into updates | Indicates whether new features will maintain accessibility |
| Support Channels | Clear process for reporting accessibility issues | Ensures your team can get help when accessibility problems arise |

Step 5: Consider practical implementation

Finally, evaluate how the documented conformance levels will affect your actual operations:

| Consideration | Questions to Ask | Example Analysis |
|---|---|---|
| Staff Impact | Which team members rely on specific assistive technologies? | Will dispatch operators using screen readers be able to process emergency calls efficiently? |
| Training Needs | What workarounds need to be documented? | If the certification management system lacks keyboard navigation support, what alternative workflows are available? |
| Integration Requirements | How will accessibility issues affect other systems? | Does partial support for data export affect integration with emergency response protocols? |

Red flags in VPAT® assessment

Certain warning signs can indicate potential issues with the conformance documentation or the product's accessibility. Here's what to watch for:

Documentation red flags

| Warning Sign | What It Indicates | Example in Emergency Response Software |
|---|---|---|
| Vague Explanations | Might indicate superficial testing or lack of understanding | "Partially supports screen readers" without specifying which features work and which don't |
| Generic Responses | Could mean testing wasn't thorough | "Product supports keyboard navigation" without details about specific workflows |
| Missing Context | May indicate incomplete evaluation | No mention of how the dispatch alerts work with assistive technologies |
| Unexplained "Not Applicable" | Could hide untested features | Marking mobile accessibility as "N/A" without explaining why |
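
Two of these documentation red flags can be screened for mechanically before a human reads the full report. The sketch below is a simple heuristic in Python, assuming each VPAT® row has been transcribed into a small record. The `Row` type, the function name, and the eight-word minimum are all hypothetical choices for illustration. A short remark is not always a vague one, so treat the output as a list of rows to read more closely.

```python
from dataclasses import dataclass

# Hypothetical minimum; remarks shorter than this get a second look.
MIN_REMARK_WORDS = 8


@dataclass
class Row:
    """One criterion row transcribed from a VPAT table."""
    criterion: str
    level: str
    remarks: str = ""


def documentation_flags(row: Row) -> list[str]:
    """Return the documentation red flags that apply to a single row."""
    flags = []
    word_count = len(row.remarks.split())
    if row.level == "Not Applicable" and word_count == 0:
        flags.append('unexplained "Not Applicable"')
    if (row.level in ("Partially Supports", "Does Not Support")
            and word_count < MIN_REMARK_WORDS):
        flags.append("vague or missing explanation")
    return flags
```

For instance, a "Partially Supports" row whose only remark is "Partially supports screen readers" would be flagged, while a row that names the affected screens would pass.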

Content red flags

| Warning Sign | Why It's Concerning | Real-World Impact |
|---|---|---|
| Perfect Compliance | Rare for complex systems | A dispatch system claiming zero accessibility issues needs deeper investigation |
| Inconsistent Details | May indicate poor testing methodology | Different conformance levels reported for similar features across components |
| Missing Components | Could indicate incomplete testing | No mention of field reporting interface accessibility |
| Outdated Information | May not reflect the current version | Conformance levels from before major interface updates |

Critical assessment factors beyond conformance

While conformance levels are important, other factors can significantly impact accessibility in practice:

Vendor accessibility commitment

You also need to look at how the vendor approaches accessibility holistically. Sure, they may have a solid VPAT® this time around, but what happens if you run into some accessibility issues somewhere down the line? You need to ask:

- Do they integrate accessibility testing in development?

- Does product design include accessibility experts?

- How quickly do they address reported accessibility issues?

- How will they handle accessibility in updates?

- What is their roadmap for improving accessibility?

- How do they incorporate user feedback into accessibility improvements?

Real-world implementation

Think about the practical application in your environment:

| Factor | Questions to Consider | Example |
|---|---|---|
| User Impact | How will partial support affect different user roles? | Can visually impaired dispatch operators handle emergency calls efficiently? |
| Workarounds | Are temporary solutions practical for your team? | Is the alternate workflow for certification management realistic during busy periods? |
| Training Needs | What additional support will users need? | How will you train field personnel on accessible mobile features? |

Making the final assessment

Remember that a VPAT® is just one tool in your evaluation process. You can make a more informed decision about whether a product will truly serve all your users effectively by considering both conformance levels and these broader factors:

- Critical features vs. nice-to-have features

  - Focus on must-have accessibility for mission-critical functions

  - Weigh the impact of accessibility issues on core operations

- User feedback and testing

  - If possible, have your team test critical features

  - Consider pilot testing with actual assistive technology users

- Support and documentation

  - Evaluate accessibility documentation quality

  - Assess vendor's accessibility support capabilities

- Compliance requirements

  - Ensure conformance aligns with your organization's needs

  - Consider future accessibility requirements

Learn how to navigate VPAT® requirements with our certified accessibility experts

Understanding and evaluating VPATs® requires both technical knowledge and practical experience. At Promet Source, our IAAP and Section 508 Trusted Tester certified accessibility experts have helped numerous government agencies and organizations effectively evaluate technology purchases using thorough VPAT® assessment.

Our accessibility experts can help you:

- Interpret complex conformance documentation

- Identify potential accessibility gaps

- Evaluate vendor accessibility commitment

- Plan for successful implementation

- Ensure compliance with accessibility requirements

Don't navigate VPAT® evaluation alone. Schedule a consultation with our certified accessibility professionals to ensure your technology purchases truly serve all your users.
